Stop re-explaining your project to every AI tool.

ContextStream remembers what your AI tools forget: decisions, context, and the reasoning behind them. That memory is shared across tools and persists across sessions—no more re-explaining yourself. It retrieves only relevant context for each conversation, keeping prompts lean. For code, it goes deeper with semantic search and dependency analysis.

- Shared memory across tools that doesn't reset between chats

- Context pack: relevant code/docs/decisions only (no copy-pasting, no bloated chat history)

- Dependency + impact analysis on demand ("what breaks if I change this?")

3,000 free operations • No credit card required

Need recurring usage + no-expiry memory? Explore Pro/Elite

Setup in <2 minutes • Founding members get 50% off for life (first 1,000)

One command authenticates you, creates an API key, and writes the correct MCP config for your tool.

Local MCP server + transparent data flow. Common dependency/build directories and large files are skipped by default; data is encrypted.

See the difference

Watch how ContextStream transforms your AI workflow

Left: Manual workarounds, scattered notes. Right: Automatic memory that just works.

Search That Actually Works

Five intelligent search modes, each optimized for different tasks. Find code by meaning, by symbol, by pattern — and save tokens doing it.

"how is authentication handled"

Finds actual auth logic, middleware, and session handling

Understands meaning, not just keywords

"rate limiting implementation"

Combines meaning + keywords for best of both

Most accurate for general questions

"UserAuthService"

Exact class/function matches with context

Perfect for jumping to definitions

"oldFunctionName"

All references with word-boundary precision

Safe renames, no false positives

"*.test.ts"

All matching files by glob pattern

Find files by naming convention

"TODO"

Every single match, like grep

When you need ALL occurrences
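To see why word-boundary matching matters for renames, here is a minimal TypeScript sketch. It illustrates the general technique and is not ContextStream's implementation; `findReferences`, `escapeRegExp`, and the sample source are hypothetical names made up for this example. A plain substring search for `oldFunctionName` also hits `oldFunctionNameV2`, while a pattern wrapped in `\b` anchors does not.

```typescript
// Escape characters that have special meaning in a regular expression.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Hypothetical helper: 1-based line numbers where `symbol` appears as a
// whole word rather than as part of a longer identifier.
function findReferences(source: string, symbol: string): number[] {
  const pattern = new RegExp("\\b" + escapeRegExp(symbol) + "\\b");
  const hits: number[] = [];
  source.split("\n").forEach((line, index) => {
    if (pattern.test(line)) {
      hits.push(index + 1);
    }
  });
  return hits;
}

const sampleSource = [
  "function oldFunctionName() {}",   // real reference
  "function oldFunctionNameV2() {}", // different symbol, shares a prefix
  "oldFunctionName();",              // real reference
  "const x = myoldFunctionName;",    // different symbol, shares a suffix
].join("\n");

// Word-boundary search: only the two real references.
const renameTargets = findReferences(sampleSource, "oldFunctionName"); // [1, 3]

// Naive substring search: all four lines, including two false positives.
const substringHits = sampleSource
  .split("\n")
  .filter((line) => line.indexOf("oldFunctionName") !== -1).length; // 4
```

Note that `\b` treats only letters, digits, and `_` as word characters, so identifiers containing `$` would need a custom boundary check.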

Smart Token Savings

Search automatically suggests the most token-efficient response format based on your query. Get exactly what you need without context bloat.

Complete code context

Best for: Understanding code

Path + line + snippet

Best for: Symbol lookups

Just the number

Best for: 'How many TODOs?'

More Accurate

Finds the right code on the first try

Faster Answers

One search instead of file-by-file scanning

Lower Cost

Smaller context means fewer tokens spent
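As a rough illustration of how a response format could be suggested from the query, the sketch below maps the three formats described above (complete code context, path + line + snippet, just the number) to simple heuristics. The format names `full`, `minimal`, and `count`, the function `suggestFormat`, and the rules themselves are assumptions for this example; the page does not document how ContextStream actually decides.

```typescript
// Hypothetical labels for the three response formats described above.
type SearchResponseFormat = "full" | "minimal" | "count";

// Hypothetical heuristic, not ContextStream's actual logic.
function suggestFormat(query: string): SearchResponseFormat {
  const trimmed = query.trim();

  // "How many ...?" only needs a number back.
  if (/^how many\b/i.test(trimmed)) {
    return "count";
  }

  // A single identifier looks like a symbol lookup:
  // path + line + snippet is enough.
  if (/^[A-Za-z_$][\w$]*$/.test(trimmed)) {
    return "minimal";
  }

  // Anything else is treated as a question about how code works,
  // which benefits from complete code context.
  return "full";
}

const countFormat = suggestFormat("How many TODOs?");
const symbolFormat = suggestFormat("UserAuthService");
const questionFormat = suggestFormat("how is authentication handled");
```

The point of the design is that the cheapest format that still answers the question is chosen first, so a counting question never pays for full file content.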

Local MCP server - encrypted index storage

Built with security and privacy in mind from the beginning. During indexing, the local MCP server sends file content to the ContextStream API to build embeddings and analyze dependencies. We store an encrypted index (embeddings + metadata + limited file content for search/browsing). Full-graph tier plans (Elite/Team/Enterprise) can store full file content in encrypted object storage when enabled.

Your IDE/Terminal (Cursor, Claude Code, Codex, Windsurf, VS Code)

→ MCP Server (runs locally on your machine)

→ ContextStream API (secure and encrypted)

→ Vector Storage (embeddings + metadata stored)

- Indexing: File content is sent for embedding generation, code analysis, and search indexing.

- Queries: Your search query is sent; matching code and context are returned from the stored index.

- Excluded automatically: common dependency/build directories, lockfiles, and large files; only common code/config/doc file types are indexed.
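The exclusion rules above can be pictured as a filter applied to each file before anything is sent for indexing. The sketch below is a guess at the shape of such a filter, not ContextStream's actual rules: the directory names, lockfile names, extensions, and the 1 MiB size limit are all hypothetical values chosen for illustration.

```typescript
interface CandidateFile {
  path: string;      // forward-slash separated, relative to the project root
  sizeBytes: number;
}

// All four lists/limits below are illustrative assumptions.
const SKIPPED_DIRS = ["node_modules", "dist", "build", "target", ".git"];
const LOCKFILES = ["package-lock.json", "yarn.lock", "pnpm-lock.yaml", "Cargo.lock"];
const INDEXED_EXTENSIONS = [".ts", ".js", ".py", ".rs", ".go", ".json", ".yaml", ".md"];
const MAX_SIZE_BYTES = 1024 * 1024;

function shouldIndex(file: CandidateFile): boolean {
  const segments = file.path.split("/");
  // After pop(), `segments` holds only the directory parts of the path.
  const fileName = segments.pop() || "";

  // Skip anything inside a dependency or build directory.
  if (segments.some((dir) => SKIPPED_DIRS.indexOf(dir) !== -1)) {
    return false;
  }
  // Skip lockfiles even though their extension would otherwise qualify.
  if (LOCKFILES.indexOf(fileName) !== -1) {
    return false;
  }
  // Skip large files.
  if (file.sizeBytes > MAX_SIZE_BYTES) {
    return false;
  }
  // Index only known code/config/doc file types.
  const dot = fileName.lastIndexOf(".");
  const extension = dot === -1 ? "" : fileName.slice(dot);
  return INDEXED_EXTENSIONS.indexOf(extension) !== -1;
}

const indexesSourceFile = shouldIndex({ path: "src/auth/session.ts", sizeBytes: 2048 });
const indexesDependency = shouldIndex({ path: "node_modules/lodash/index.js", sizeBytes: 2048 });
```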

That's just the beginning

Here are a few other powerful things you can do:

Impact Analysis

"What breaks if I change the UserService class?"

See all dependencies and side effects before refactoring.

Decision History

"Why did we choose PostgreSQL over MongoDB?"

Recall past decisions with full context and reasoning.

Semantic Code Search

"Find where we handle rate limiting"

Search by meaning, not just keywords. Find code by intent.

Knowledge Graph

"Show dependencies for the auth module"

Pro includes Graph-Lite for module-level links; Elite unlocks full graph layers.

Lessons Learned

"NO! You pushed without running tests again!"

→ AI captures the mistake and never repeats it

When you correct your AI, it remembers. Mistakes are captured automatically and surfaced in future sessions to prevent repeating the same errors.
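The impact-analysis question above ("What breaks if I change the UserService class?") comes down to walking a dependency graph backwards: start at the changed module and collect everything that depends on it, directly or transitively. The sketch below shows that idea with a breadth-first traversal. The module names and the `impactedBy` function are hypothetical, and ContextStream's own analysis may work differently.

```typescript
// Hypothetical dependency map: each module lists the modules it imports.
const importGraph: Record<string, string[]> = {
  UserService: ["Database"],
  AuthController: ["UserService", "TokenStore"],
  ProfilePage: ["UserService"],
  AdminPanel: ["AuthController"],
  TokenStore: [],
  Database: [],
};

// Returns every module that directly or transitively depends on `changed`.
function impactedBy(changed: string, graph: Record<string, string[]>): string[] {
  // Invert the edges: for each module, record who imports it.
  const dependents: Record<string, string[]> = {};
  Object.keys(graph).forEach((importer) => {
    (graph[importer] || []).forEach((dependency) => {
      const list = dependents[dependency] || [];
      list.push(importer);
      dependents[dependency] = list;
    });
  });

  // Breadth-first walk over the inverted edges.
  const impacted: string[] = [];
  const queue: string[] = [changed];
  while (queue.length > 0) {
    const current = queue.shift();
    if (current === undefined) {
      break;
    }
    (dependents[current] || []).forEach((dependent) => {
      if (dependent !== changed && impacted.indexOf(dependent) === -1) {
        impacted.push(dependent);
        queue.push(dependent);
      }
    });
  }
  return impacted.sort();
}

// AdminPanel is included because it imports AuthController,
// which in turn imports UserService.
const affectedModules = impactedBy("UserService", importGraph);
// ["AdminPanel", "AuthController", "ProfilePage"]
```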

Token-optimized MCP toolset (v0.4.x)

All ContextStream capabilities via ~11 consolidated domain tools — no tool-registry bloat. Index projects, track decisions, analyze dependencies, search semantically — plus GitHub, Slack, and Notion integrations.

"But don't AI coding tools already have memory?"

Built-in memory is limited:

- ✗ Vendor lock-in: switch tools, lose everything

- ✗ Expires or resets: context vanishes over time

- ✗ No semantic search: can't find past conversations

- ✗ Personal only: teammates start from zero

- ✗ No API access: can't automate or integrate

- ✗ Memory isolated from code: decisions aren't linked to codebase

- ✗ Clunky to use: no simple way to save or retrieve context

ContextStream is different:

- ✓ Universal: works with Cursor, Claude, Windsurf, any MCP tool

- ✓ Persistent forever: never lose context (paid plans)

- ✓ Semantic search: find anything across all history

- ✓ Team memory: shared context, instant onboarding

- ✓ Full API access: token-optimized MCP (no tool-registry bloat)

- ✓ Knowledge graph: decisions linked to code, impact analysis

- ✓ Natural language: "remember X", "what did we decide about Y?"

"What about self-hosted memory tools?"

Self-hosted means self-managed:

- ✗ Docker, servers, maintenance: you're the ops team now

- ✗ You manage uptime: backups, scaling, monitoring on you

- ✗ Just memory storage: no code intelligence or impact analysis

- ✗ Basic key-value: no knowledge graph or relationships

- ✗ Limited API surface: fewer tools, less automation

- ✗ Team features = extra work: auth, permissions, sharing

ContextStream handles it all:

- ✓ 2-minute setup: no Docker, no servers, no devops

- ✓ We handle infrastructure: uptime, backups, scaling included

- ✓ Code intelligence: understands your architecture and decisions

- ✓ Knowledge graph: linked memory with impact analysis

- ✓ Consolidated MCP tools: full API, deep automation

- ✓ Teams built-in: workspaces, sharing, instant onboarding

See the magic in your next coding session

Three steps. Copy-paste config. Ask one question. Watch your AI actually remember.

Add to your MCP config (60 seconds)

Paste this into your Cursor/Claude MCP settings:

    {
      "mcpServers": {
        "contextstream": {
          "command": "npx",
          "args": ["-y", "@contextstream/mcp-server"],
          "env": {
            "CONTEXTSTREAM_API_URL": "https://api.contextstream.io",
            "CONTEXTSTREAM_API_KEY": "your-key-here"
          }
        }
      }
    }

Try it out (30 seconds)

Open Cursor or Claude, start a new chat, and type:

"Initialize session and remember that I prefer TypeScript with strict mode, and we use PostgreSQL for this project."

Then start a brand new conversation and ask:

"What are my preferences for this project?"

It remembers. Across sessions. Across tools. With no expiry on paid plans.
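If your MCP settings file already lists other servers, the `contextstream` entry from the config above needs to be merged in rather than pasted over the whole file. Here is a minimal sketch of that merge, assuming the settings use the same `mcpServers` shape shown in the config. The `withContextStream` function and the `filesystem` entry are hypothetical; the command, arguments, and environment variable names are taken from the config above.

```typescript
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Hypothetical helper: returns a new config with the ContextStream entry
// added, leaving any other configured servers (and the input) untouched.
function withContextStream(current: McpConfig, apiKey: string): McpConfig {
  return {
    mcpServers: {
      ...current.mcpServers,
      contextstream: {
        command: "npx",
        args: ["-y", "@contextstream/mcp-server"],
        env: {
          CONTEXTSTREAM_API_URL: "https://api.contextstream.io",
          CONTEXTSTREAM_API_KEY: apiKey,
        },
      },
    },
  };
}

// Example: a settings object that already has one (made-up) server.
const currentSettings: McpConfig = {
  mcpServers: {
    filesystem: { command: "npx", args: ["-y", "some-other-mcp-server"] },
  },
};

const mergedSettings = withContextStream(currentSettings, "your-key-here");
const mergedJson = JSON.stringify(mergedSettings, null, 2);
```

Writing `mergedJson` back to the settings file is left out here because the file location differs between tools.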

The difference is night and day

See how ContextStream transforms your AI workflow

Before: You explain the auth system. Close chat. Open new chat. Explain auth again.

After: AI recalls your auth decisions from last month, including the JWT choice and refresh token strategy.

Before: Switch from Cursor to Claude. Lose all context. Start over.

After: Same memory everywhere. In Cursor, Claude, or Windsurf, your AI knows you.

Before: New team member joins. Days of onboarding conversations.

After: Shared workspace memory. New hires get context from day one.

Before: "Why did we build it this way?" No one remembers.

After: Decisions linked to code. Ask why, get the reasoning and the commit.

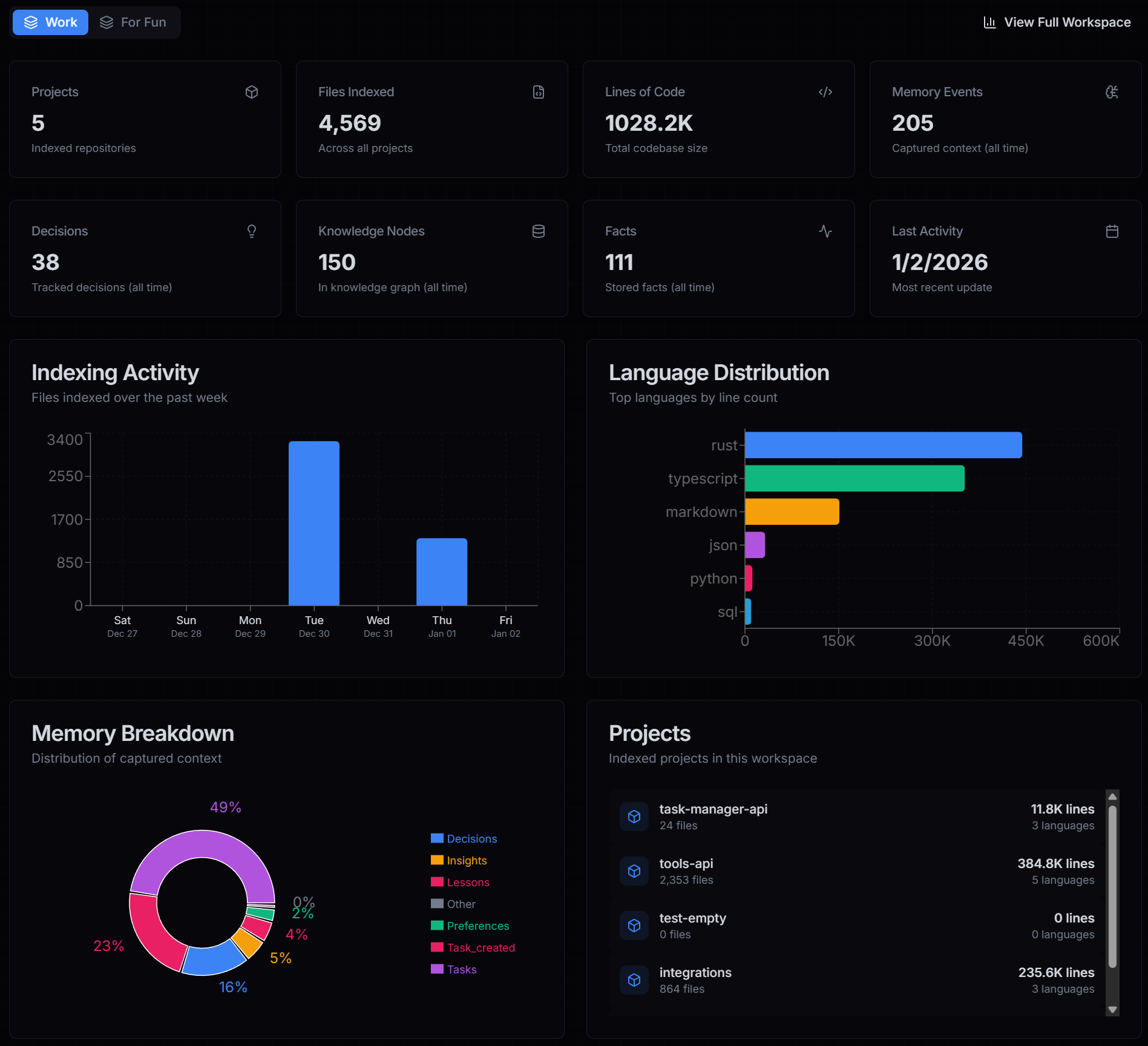

Gain Visibility into Your Codebase

Track token savings and estimated AI cost saved, plus indexing status, language distribution, and memory usage. See exactly what your AI knows — and what it's saving you.

Join developers who are done repeating themselves

ContextStream is in active development with real users. Early adopters get direct access to the team and influence on the roadmap.

"I was tired of explaining our auth setup to Claude every session. Now I just say 'init session' and it already knows."

— Early access feedback

Memory is just the start

ContextStream also understands your code — dependencies, impact, architecture, lessons learned, and insights captured.

Persistent Memory

Store decisions, preferences, and context. Searchable across sessions and tools.

Semantic Search

Find code by what it does, not just keywords.

Dependency Analysis

Know what depends on what before you refactor.

Knowledge Graphs

Connect decisions to code, docs to features.

Lessons Learned

Capture mistakes so they are not repeated.

Auto-Session Init

AI gets context automatically. No copy-pasting.

Token Savings Dashboard

Track estimated tokens saved vs default tools (Glob/Grep/Search/Read), plus calls avoided and trends.

Private & Secure

Encrypted at rest, never used for training.

I built ContextStream because I was tired of my AI forgetting everything.

As a software engineer, I wanted more than code memory — I wanted an AI brain that follows me everywhere. One that remembers my decisions across Cursor, Claude, Windsurf, and every tool I use.

Now it does. And it's been a game-changer for how I work.

Your Code Stays Yours

Your data is encrypted, never used for training, and you control who has access.

Encrypted at Rest

AES-256 encryption for all stored data

No Training on Your Data

We never use your code to train AI

You Control Access

Workspace permissions & API keys

Delete Anytime

Full control to delete your data instantly

Technical Security Specifications

Frequently Asked Questions

Everything you need to know about ContextStream